My last post discuss about SQL queries. Nevertheless, sometimes data came from differents databases. In such cases, it is no longer possible to use SQL. ETL tools, which stands for Extract Transform Load are designed to easilly allow data transformations. I have currently used three tools : Talend, SAP Business Objects Data Integrator and Kettle. I will review them and explain one or two tips I’ve learned using ETL tools.

My last post discuss about SQL queries. Nevertheless, sometimes data came from differents databases. In such cases, it is no longer possible to use SQL. ETL tools, which stands for Extract Transform Load are designed to easilly allow data transformations. I have currently used three tools : Talend, SAP Business Objects Data Integrator and Kettle. I will review them and explain one or two tips I’ve learned using ETL tools.

Generalities

The aim of an ETL are numerous. Kimball define 34 subsystems. To be more general I primarily focus on

- gain in speed of development : ETL generally use some visual interface with components in order to fasten the process;

- ease the maintenance/evolution : plain SQL request and programming tend to be quite complex very quickly;

- functionalities : if one of you data base contains XML fields or you want to parse web pages you need to hope that your ETL allows it. If not … very dirty things will happens.

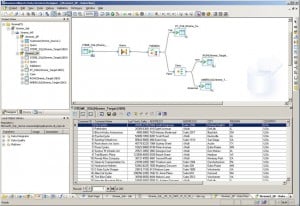

SAP Business Object Data Integrator

It’s the only one with not open source I’ve used (version XI r2). An ETL is composed of Work flow and Data flow. Work flows are the structure as they can contains work flows, data flows and orchestration elements (e.g. if then else block). Data flow only work on data manipulation objects : table, join, switch, … Data Integrator is not designed to let you write complex SQL (it’s possible but not recommended), a table element read a table and that’s all. What is great is that Data Integrator will merge table and join elements to create the complex SQL query if everything can be done in one database (let say in a TEL way), . You quickly end up with many elements in your data flows but no big chunk of SQL. Data Integrator is environment aware, you can define a data source and at run time decide which database it represent (production, dev, …). For the downsides, don’t expect copy paste to work, neither expect to do tricky things.

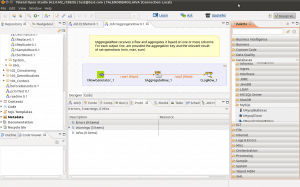

Talend

Talend is an open source product. While Data Integrator has a few very generic components to build, Talend has hundred of components which cover everything you could want. For instance, you have one table input component for each database (even many table input component for one database). No separation between work flow and data flow, only job (but you can have sub jobs). On the downside, it’s amazingly slow to use. I’m also not a big fan that it generate a Java program (it could be very useful in some case but not for a serious process). A lot of bugs in it too (that’s the cost of containing so much).

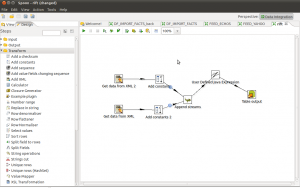

Kettle

Kettle is also an open source product integrated in the Pentaho suite. To describe it, let’s say that if Talend is shiny, marketing enhanced, Kettle is … functional and unpractical. There is again jobs (work flow) and transformations (data flow), while the first one is executed sequentially, the second one is multi-threaded, expect fun to understand. There is many program like Spoon (the designer which could execute both jobs and transformations), Kitchen (execute jobs), Pan (execute transformations). Nevertheless, it’s currently my favorite. When you understand the underlying logic everything is easier and behave as expected. Not as complete as Talend but enough for 99% of what I want and you can always use some Java code for the rest.

How I used ETL in the everyday work

Every times I should extract a big chunk of data I use and ETL (usually a simple input query and an output in a CSV or Excel file). It just takes almost no time and you keep a file with all the process in case you need it later. And of course I use ETL to load the data warehouse. Here is some tips :

- Split stuff : Divide your ETL by subjects (accounting, CRM, …), the higher level vision should be simple.

- Keep it ETL : I always dump all data needed in the staging area, make most transformations in SQL (as everything is in the same database, so one table input and one table output to the data warehouse but no “create table as” statement).

- Make it environment friendly : you should have at least a dev environment and a production environment. Therefore you can test your jobs in the dev environment before putting it in production, the job will not change just the runtime configuration.

Let's stay in touch with the newsletter

October 11, 2013 at 13:09

I must say informative post. As per my point of view if you’re considering about Data manipulation ETL tool then we shouldn’t forget about Informatica tool, which is very popular for Extract,transfer and load. Hope you should discuss about informatica and Datastage.