With the recent release of Pivotal HD, I wanted to check the current state of Hadoop SQL engines. SQL integration is growing in the Hadoop landscape and it’s a good thing for productivity and integration. Despite old and bloated, SQL has still no competition when it comes to data manipulation. I’ve downloaded the latest single node VM from Cloudera and Pivotal and made some tests. Results were quite interesting.

With the recent release of Pivotal HD, I wanted to check the current state of Hadoop SQL engines. SQL integration is growing in the Hadoop landscape and it’s a good thing for productivity and integration. Despite old and bloated, SQL has still no competition when it comes to data manipulation. I’ve downloaded the latest single node VM from Cloudera and Pivotal and made some tests. Results were quite interesting.

I used the following simple query on a fact table. This is a simple group by that require a sequential read of all the data. It is favoring column storage as the query use only 2 columns (while the table is quite wide). It’s a typical analyst query. The used dataset is provided in a 1 million rows and a 10 millions rows so it’s a toy dataset but we can still derive some thoughts.

select product_category_name, count(*) cnt from order_lineitems group by product_category_name order by cnt desc limit 10;

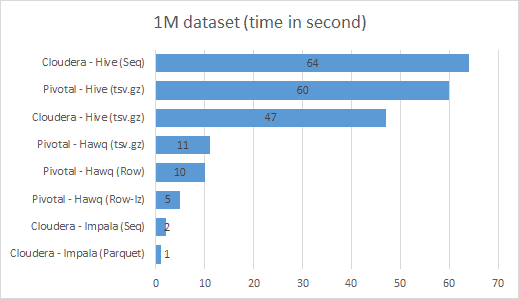

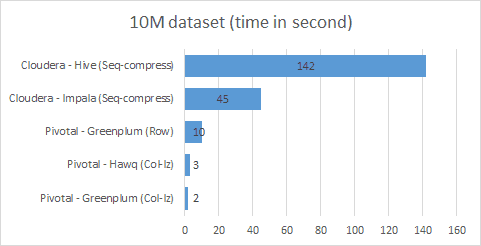

You can find performance of each engine in the following charts. Notice that for the same engine there might be different results because it can handle data in many shapes. I didn’t make all possible comparisons but the only I really miss is the Cloudera Impala using Parquet over the 10M dataset. It didn’t work because of some OutOfMemory exceptions during the dataset creation. The Pivotal-Greenplum hardware was a 2GB VM using 2 cores (2 segments and one master) while the other test where running on a 4GB VM with a single thread but at higher frequency.

Map/Reduce is SLOOOOWWWWWW

Either Cloudera or Pivotal version of Hive is terribly slow. The issue is obviously the need to materialize every step on the hard drive. It give you restartability and scalability but the cost is way too high. You need 10 times more hardware to achieve the same result (which makes it 10 times more likely to fail). The Cloudera flavor is a bit faster but the execution process was similar so I draw no conclusion here.

I didn’t have a comparison between Sequence compressed and tsv compressed. It would favor the Sequence format. But enabling compression in Hive is disappointing (not a table or tablespace attribute but a session parameter) so I didn’t dig much.

Hawq and Impala are great products

Hawq is in line with Greenplum.I could have dig much, but overall the order of magnitude is the same and that’s all we need to know. Pivotal HD with Hawq is therefore amazing as it has the performances of Greenplum with a great integration with Hadoop.

Impala behave strangly on the 1M dataset (maybe the dataset was cached in memory), but regarding the 10M dataset data it seems to behave like Hawq. I didn’t expected such great results considering how Impala is young. While performances are great, the functionnality side is weaker. First, the SQL is weak (at the level of Hive) and you can’t currently use tsv.gz file as input which is a odd limit (as it’s really convenient to store data this way in HDFS).

Conclusion

I was quite impressed by Pivotal HD/Hawq. On one hand, it gives you high performance when you use compressed columnar storage. On the other hand, you keep flexibility to use standard flat files. I didn’t tested the HBase integration but if that work, you have the perfect data stack for most analytics usages. The drawback of Pivotal is the marketing story. Hawq is not provided in the community package.

Impala is very interesting too. It need to grow from a feature perspective but the performance side is already there.

PS : I didn’t test Hortonworks, but I keep good faith in their Stinger initiative to make Hive first class again. In fact, for the long run I would bet on it.

Let's stay in touch with the newsletter